In the last post <link> we got data all the way from a Raspberry Pi up to Amazon and back to the Dot, and it was able to say, "The temperature is 79 degrees," and we were excited. What, you got an error? How the heck do you troubleshoot this thing?

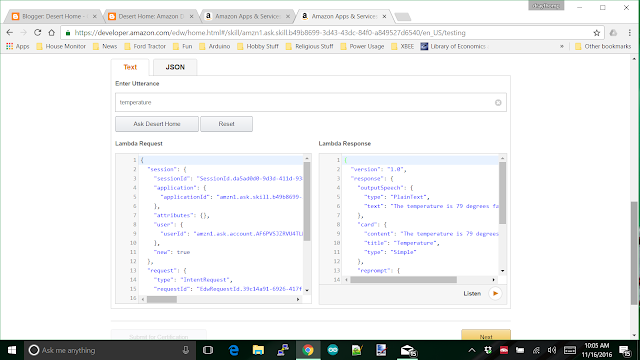

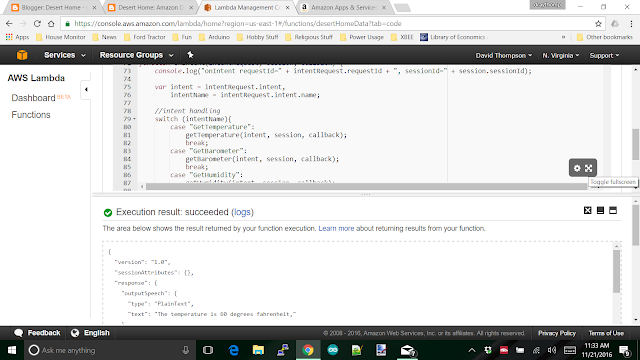

Fortunately, there actually is a way of doing this, even though they pass complex JSON strings around to carry data from one subsystem to another. The screen you got last time:

has the JSON string you need to feed directly into the Lambda function we created in a previous post <link>. Simply copy the entire 'Lambda Request' and then paste it into the test screen for the Lambda function. I know this description leaves a lot of questions, so let's look a bit closer:

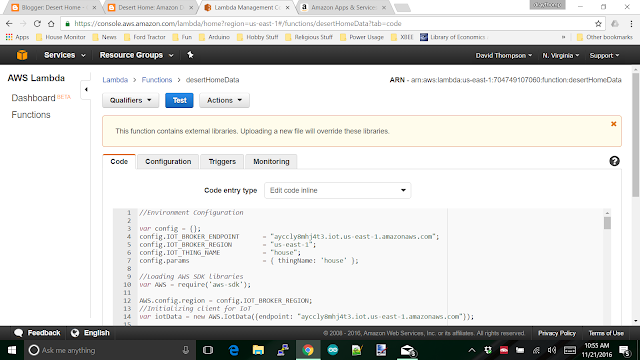

First copy the entire text from the Lambda Request shown above, then go to the Lambda Management Console:

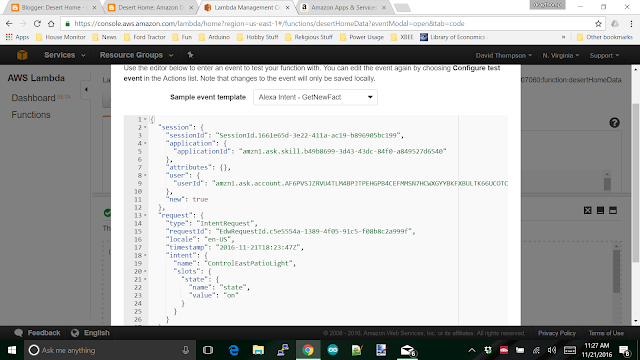

Then select Actions -> Configure Test Event

They don't have a blank test event or anything really close to what you need, so just choose the first one, 'GetNextFact', and replace ALL the text inside the edit box with what you saved above. This will replace the default with what you want. Save it; you have to scroll down on the page to get the save button to show.

Now, you're ready to actually feed the JSON to your Lambda function. You can now press the 'Test' button and see what happens. I can't really be much help on specific errors because I can't know in advance what kinds of problems you'll hit, but there should be enough information to get you started.

You can also edit the test event you just created to test other things. I used one test event to test most of my information requests, things like 'temperature' and 'rainfall', just by changing the intent name inside the JSON string.
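If you'd rather not hand-edit the JSON each time, the same trick can be sketched in a few lines of JavaScript. This is only an illustration: it assumes the usual Alexa request layout where the intent name lives at `request.intent.name`, and the `captured` object and the intent names `GetTemperature` and `GetRainfall` are made-up stand-ins for whatever your own Lambda Request contains.

```javascript
// Return a copy of a captured Lambda Request with a different intent name.
// Assumes the usual Alexa layout: event.request.intent.name.
function withIntentName(capturedEvent, newIntentName) {
    // Deep copy via JSON so the saved original is never modified.
    var copy = JSON.parse(JSON.stringify(capturedEvent));
    copy.request.intent.name = newIntentName;
    return copy;
}

// Trimmed-down stand-in for a real captured request (hypothetical values).
var captured = {
    session: { "new": true },
    request: {
        type: "IntentRequest",
        intent: { name: "GetTemperature", slots: {} }
    }
};

// Build a second test event from the first one.
var rainfallEvent = withIntentName(captured, "GetRainfall");
console.log(JSON.stringify(rainfallEvent, null, 2));
```

Paste the printed JSON into the test event box just like the original.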

Now you have some hope of being able to debug this thing, so let's walk through adding control of something from the Dot. This time I'm going to start with the voice interaction and work down to the Raspberry Pi at my house instead of the other way around. The reason for doing it this way is that we now have the basic infrastructure in place, and adding things is easier from the Dot back to the Pi. We'll start at the Alexa Interaction Model, which looks like this:

An intent needs to be added, and I'm using the one I added first to my setup. The intent is ControlEastPatioLight and it has one 'slot', state, that can be either on or off, so I named the slot type onOff. Very clever of me. Just below the intent window, I added a new slot type called (duh) onOff:

And the contents of the slot type are:

What's happening is that Alexa doesn't send the actual text that is spoken; it sends intents, which may contain enumerations, to the Lambda function. Enumerations are simply things that can be chosen from a pre-defined list. In this case the two slot values I chose were 'on' and 'off'. I could have used 0 and 1, or Fred and Mary; it doesn't matter what they are. What matters is that they make sense to you, because you have to use them later in actually controlling your devices.
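To make that concrete, here is a rough sketch of pulling the slot value out of an intent on the Lambda side and checking it against the list. The `readStateSlot` helper and the `sampleIntent` object are my own illustration, not something Amazon provides; the slot name `state` matches what the code later in this post reads. The check is there because Alexa can occasionally pass along a value that isn't in your list, or no value at all.

```javascript
// The values defined in the onOff slot type.
var ALLOWED_STATES = ["on", "off"];

// Return "on" or "off" from the intent's state slot, or null if unusable.
function readStateSlot(intent) {
    var slot = intent.slots && intent.slots.state;
    if (!slot || typeof slot.value !== "string") {
        return null; // slot missing, or Alexa could not fill it
    }
    var value = slot.value.toLowerCase();
    return ALLOWED_STATES.indexOf(value) >= 0 ? value : null;
}

// Hypothetical intent, shaped like the one inside a Lambda Request.
var sampleIntent = {
    name: "ControlEastPatioLight",
    slots: { state: { name: "state", value: "on" } }
};

console.log(readStateSlot(sampleIntent));
console.log(readStateSlot({ name: "ControlEastPatioLight", slots: {} }));
```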

Now, for the utterances, I used the simplest ones I could think of:

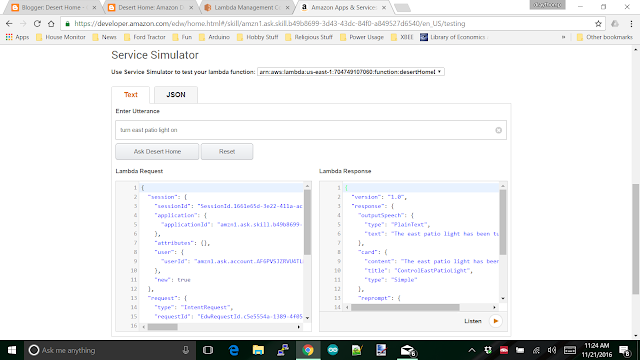

I highlighted the two above that I use for the light. The '{state}' refers back to the slot in the intent I created above and will be replaced with either on or off from the slot definition, based on what you actually say to the Dot. Alexa parses through the sound and inserts its best guess about what you said into the JSON string that will be sent to the Lambda function. So, save this work and go test it in the test screen:

Your Lambda Response will be an error because you haven't added the code yet, but the test generated the Lambda Request you'll need to test the new capability you're adding, so copy it out of the Request window and go over to the Lambda function and paste it into the test configuration before it gets lost:

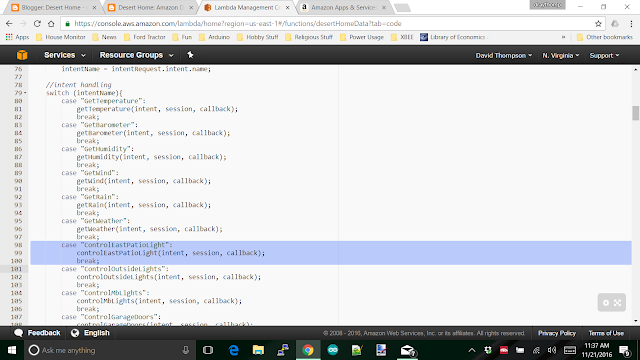

It's not ready to test yet since we don't have any code to support it, but save it now for use later. We're going to add code now to support the new intent. The code goes in the code window, which you will see after you save the event template. The window is small and hard to use, but you can scroll all the way down to the bottom of the little code window and there will be a button to expand it to full screen. I use this to edit, since there just isn't enough visible to get proper context for changes:

The button is in the image above over on the right; I put the cursor there so you could find it. I searched for this for about 20 minutes the first time.

Add a couple of lines into the intent handling to get you to a routine that will format the MQTT request that AWS IoT needs:
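Those couple of lines amount to one more branch in the intent dispatch. Here's a rough sketch of the idea, assuming your dispatch routine looks something like the `onIntent` function in Amazon's sample skills. The `GetTemperature` branch and both stub handlers are placeholders I made up so the sketch runs on its own; your real handlers build a proper speech response.

```javascript
// Stub handlers so the sketch runs by itself (hypothetical stand-ins).
function getTemperature(intent, session, callback) {
    callback({}, "temperature handler");
}
function controlEastPatioLight(intent, session, callback) {
    callback({}, "light handler, state=" + intent.slots.state.value);
}

// Route each intent name to the routine that handles it.
function onIntent(intentRequest, session, callback) {
    var intent = intentRequest.intent;
    var intentName = intent.name;

    if (intentName === "GetTemperature") {
        getTemperature(intent, session, callback);
    } else if (intentName === "ControlEastPatioLight") {
        // The new lines: hand the intent to the light routine.
        controlEastPatioLight(intent, session, callback);
    } else {
        throw new Error("Invalid intent: " + intentName);
    }
}

// Feed it a cut-down request the way the Lambda test screen would.
var routed = [];
onIntent(
    { intent: { name: "ControlEastPatioLight", slots: { state: { name: "state", value: "off" } } } },
    {},
    function (sessionAttributes, response) { routed.push(response); }
);
console.log(routed[0]);
```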

I highlighted the lines in the picture above. Now you need the code to create the MQTT request:

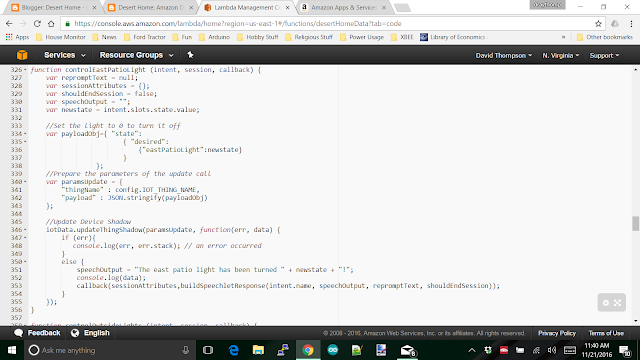

It just barely fit on the screen. Since you've already done this once, it should be easy to recognize; the differences are the payload object and the call to updateThingShadow(). I think it's pretty intuitive what is going on here. A JSON fragment is created with the content of the slot created above and passed off to the shadow as a 'desired' item. Now you're starting to understand why I described the operation of a 'Device Shadow' so carefully a few posts ago <link>; the interaction of the shadow and the code on the Raspberry Pi is very important to getting this stuff working. I know how big a pain in the bottom it is to read code from a post and try to type it in, so here's the code above in a form you can copy and paste:

```javascript
// Assumes config, iotData and buildSpeechletResponse() are already defined
// earlier in the Lambda function, as in the previous posts.
function controlEastPatioLight(intent, session, callback) {
    var repromptText = null;
    var sessionAttributes = {};
    var shouldEndSession = false;
    var speechOutput = "";
    // "on" or "off", taken from the {state} slot
    var newstate = intent.slots.state.value;

    // Ask the shadow for the new state as a 'desired' item
    var payloadObj = {
        "state": {
            "desired": { "eastPatioLight": newstate }
        }
    };

    // Prepare the parameters of the update call
    var paramsUpdate = {
        "thingName": config.IOT_THING_NAME,
        "payload": JSON.stringify(payloadObj)
    };

    // Update Device Shadow
    iotData.updateThingShadow(paramsUpdate, function (err, data) {
        if (err) {
            console.log(err, err.stack); // an error occurred
            speechOutput = "Sorry, I couldn't change the east patio light.";
        } else {
            console.log(data);
            speechOutput = "The east patio light has been turned " + newstate + "!";
        }
        // Always answer, so Alexa isn't left waiting when the update fails
        callback(sessionAttributes, buildSpeechletResponse(intent.name, speechOutput, repromptText, shouldEndSession));
    });
}
```

Be sure to change the various names to something you'll understand later.

Once again, we don't actually send a command to the Pi to change something; we send a desire. That desire is pulled off the MQTT queue and used to do the actual change, then we publish back the new state. If you get confused, go back and read the explanation I linked to above.
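The round trip can be sketched without any hardware at all. This is just an illustration of the documents involved, assuming the Pi listens on the shadow's delta topic; the actual Pi code may go about it differently. The thing name `myHouse` and the helper names are made up, while the topic layout and the `desired` / `reported` keys are the standard AWS IoT shadow format.

```javascript
var THING_NAME = "myHouse"; // hypothetical thing name

// MQTT topics AWS IoT uses for a thing's shadow.
function shadowTopics(thingName) {
    var base = "$aws/things/" + thingName + "/shadow";
    return { update: base + "/update", delta: base + "/update/delta" };
}

// What the Lambda function asks for: a desire, not a command.
function desiredDoc(newState) {
    return { state: { desired: { eastPatioLight: newState } } };
}

// What the Pi would publish back once it has acted on a delta message.
// A delta message carries the keys that differ directly under "state".
function reportedDocFromDelta(deltaMessage) {
    return { state: { reported: deltaMessage.state } };
}

var topics = shadowTopics(THING_NAME);
var desired = desiredDoc("on");
// Stand-in for the delta AWS IoT would send when desired differs from reported.
var delta = { state: { eastPatioLight: "on" } };
var reported = reportedDocFromDelta(delta);

console.log(topics.update, JSON.stringify(desired));
console.log(topics.delta, JSON.stringify(delta));
console.log(topics.update, JSON.stringify(reported));
```

Once the reported state matches the desired state, there's nothing left to change and the light is in sync with what you asked for.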

That's it for right now. The next post has a bunch of code in it for the Raspberry Pi to get the desire and do something about it. It's going to get confusing both for you to read and for me to write, so it gets its own post.

Have fun, and feel free to work ahead; you can actually see the desire created and sent over in AWS IoT if you want to.