Last post <link> we did one more of the seemingly interminable steps toward voice control in our house, the Lambda function, but we couldn't test it because we didn't have the proper input for it. So, let's get into Alexa and create a voice service so we can actually start talking to it.

For those of you who jumped into the middle of this project, Alexa is the Amazon voice service that is the engine behind the Dot, the Echo, that silly little switch they just came out with, and probably more devices over time. It works pretty well and is actually pretty awesome at picking up voice in a house. I want it to control the items in the house with voice commands, and this is my latest attempt.

The really, really good news is that this is the last chunk of stuff we have to take on before we can get data from the house. We still have to work through the steps to send data, but all the major pieces will be there and we will get to just expand the capabilities.

So, sign into the Amazon Developer Console <link> and look up at the top black bar for 'Alexa' and click on it.

This is a tiny bit tricky because it looks like you want the 'Alexa Voice Service', but you really want the 'Alexa Skills Kit'. The voice service (in this case) is really meant for manufacturers to create interfaces for their devices. It's where folk like Belkin and Philips go to do things. If you have a hundred or so developers to work on this and relatively unlimited funds, by all means, go for it. If you're basically me, one guy trying to do something cool to have fun with, you definitely want the skills kit, so click on it.

This is what it looks like AFTER you create a skill. You want 'Add a New Skill', which will take you to the next step:

Fill this in and click 'Next'. Remember, you want a 'Custom Interaction Model'. One piece of advice on the 'Invocation Name': keep it short. Don't use something like, "Bill's really cool interface to the brown house at the corner," because you'll be saying this over, and over, and over. I used "Desert Home" and it's too darn long.

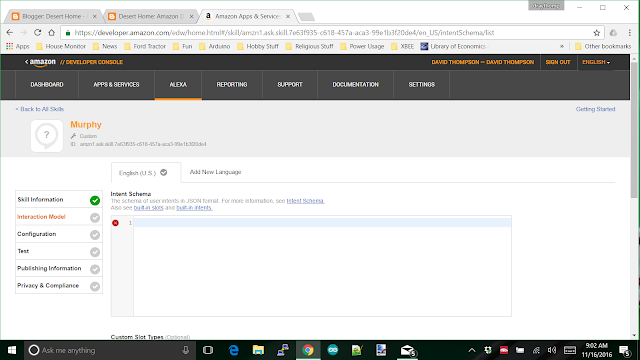

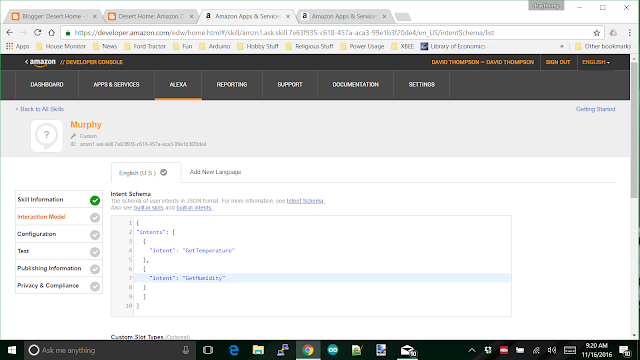

And here you are at the Interaction Model screen. Now you get to actually do some cool stuff. The top box is for a rather simple set of JSON (of course) strings, and the bottom is for the 'utterances' that will use those JSON strings to create requests that will be spoon fed to the Lambda function we just created. Here are the intents for the Lambda function we created previously:
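As a rough sketch of the shape that top box expects, here is a small Python snippet that builds an intent schema and prints the JSON you would paste in. The intent names `GetTemperature` and `GetStatus` are made up for illustration and are not the ones from the skill in this post; use whatever names your own Lambda function checks for. `AMAZON.HelpIntent` is one of Amazon's built-in intents.

```python
import json

# Hypothetical intent names for illustration only; swap in the names
# your own Lambda function actually looks for.
intent_schema = {
    "intents": [
        {"intent": "GetTemperature"},
        {"intent": "GetStatus"},
        {"intent": "AMAZON.HelpIntent"},
    ]
}

# This is the text that would go into the top box.
schema_text = json.dumps(intent_schema, indent=2)
print(schema_text)

# Parse it back to catch a stray comma or quote before the console does.
intent_names = [entry["intent"] for entry in json.loads(schema_text)["intents"]]
```

Building the schema in code is overkill for three intents, but the round trip through `json.loads` is a cheap way to find syntax slips before you hit 'Save'.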

It's a JSON string with a table of 'intent' entries. Now we want to scroll down a bit to put in some utterances:

And 'Save' them. The save function will actually create the model, which means you may get an error. So far, the errors I've had were relatively self-explanatory: things like misspelling a word or forgetting a comma. Each utterance line starts off with one of the intents you defined in the first box, and the rest of it is the regular words that you speak to the Dot. The more of these utterances you put in, the smoother it seems to work when you use it. For now, just put a few in; you can add to them later as you see fit. When you get it saved (I mean really saved) and see the success message, click 'Next'.
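To make the "each line starts with an intent" rule concrete, here is a minimal sketch that checks a block of utterances against a set of intent names before you paste it into the console. The intent names and phrases are hypothetical, and `check_utterances` is a helper invented for this example, not anything Amazon provides.

```python
def check_utterances(utterance_text, intent_names):
    """Return (line_number, line) pairs whose first word is not a known intent."""
    problems = []
    for number, line in enumerate(utterance_text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines are harmless, skip them
        first_word = line.split()[0]
        if first_word not in intent_names:
            problems.append((number, line))
    return problems


# Hypothetical utterances; the last line misspells the intent name on purpose.
sample = """GetTemperature what is the temperature
GetTemperature how hot is it
GetStatus how is the house
GetTemp what is the temperature outside"""

known = {"GetTemperature", "GetStatus"}
problems = check_utterances(sample, known)
print(problems)
```

Here the fourth line gets flagged because `GetTemp` is not one of the defined intents, which is exactly the kind of typo the console would complain about on save.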

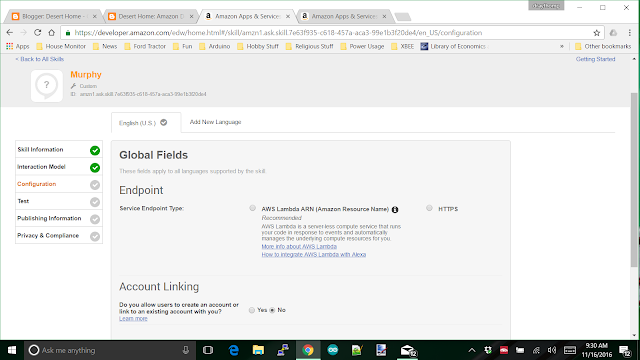

The 'Service Endpoint Type' is AWS Lambda, of course, because we went to all the trouble of creating a Lambda function for it, and you do not want users to link their accounts to this. Linking accounts is for selling services through Amazon. When you click on the Lambda radio button, it will open a couple of items you have to fill in:

Since Alexa only works in North America, that button is obvious; the empty field is something to drive you nuts. Notice they don't give you a hint what they want in here? No help button, ... NOTHING!

What they want is the ARN (Amazon Resource Name) of the Lambda function we created last post, so go there and get it. Copy it out and paste it in here. I put mine in there so you could see what it looks like. Now, click 'Next'.
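If you're not sure you copied the right thing, a Lambda ARN follows the pattern `arn:aws:lambda:<region>:<account-id>:function:<function-name>`. The sketch below checks a pasted string against that pattern. The account number and function name in the example are placeholders, not real values, and the check only covers a plain ARN without a version or alias on the end.

```python
import re

# Pattern for an unqualified Lambda function ARN (no version or alias suffix).
LAMBDA_ARN = re.compile(
    r"arn:aws:lambda:(?P<region>[a-z0-9-]+):(?P<account>\d{12})"
    r":function:(?P<name>[A-Za-z0-9_-]+)"
)


def parse_lambda_arn(text):
    """Return the ARN's parts as a dict, or None if it doesn't look like one."""
    match = LAMBDA_ARN.fullmatch(text.strip())  # strip stray copy/paste whitespace
    return match.groupdict() if match else None


# Placeholder account ID and function name, for illustration only.
parts = parse_lambda_arn(" arn:aws:lambda:us-east-1:123456789012:function:HouseSkill ")
print(parts)
print(parse_lambda_arn("HouseSkill"))  # just the function name is not enough
```

The point of the second call is that the field wants the whole ARN, not just the function's name.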

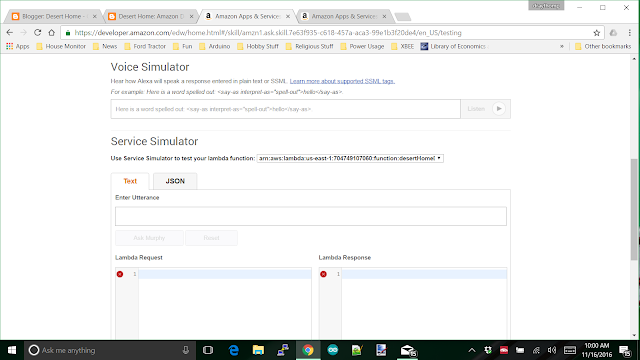

You're on the TEST SCREEN !! Finally, you get to do something and see something happen. Sure, we saw a little bit happen way back when we were messing with the MQTT interface to AWS IoT, but that was way back there. This time we get to actually try some voice related stuff. Whoo Hoo.

Just ignore the silly blue message about completing the interaction model. That message seems to always be there; click the enable button and watch the glorious test screen expand:

You get a voice simulator where you can type stuff in and have Alexa speak it back to you. There's a service simulator where you can type in text and Alexa will shape it into a JSON string that will be sent to the Lambda function, which will do whatever you told it to do and send back a response (or error) that you see in the other window. If it works, there's a button down the screen that will allow you to play the text from the response. Here's what one interaction (that actually worked) looks like:

The Lambda request window is where you can copy the JSON to use in testing the Lambda function directly: you just configure a test, paste that stuff in, and go for it.
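The same copied JSON can also be fed to a handler on your own machine. The sketch below assumes a Lambda function written in Python, which may not match yours, and the handler is a stand-in rather than the function from the last post. The request is trimmed down to the fields the handler reads (real requests carry session data, IDs, and timestamps too), and the intent name and spoken reply are placeholders.

```python
import json

# Trimmed-down request in the shape the service simulator shows.
# "GetTemperature" is a hypothetical intent name.
sample_request = json.loads("""
{
  "version": "1.0",
  "request": {
    "type": "IntentRequest",
    "intent": {"name": "GetTemperature", "slots": {}}
  }
}
""")


def build_speech(text):
    """Wrap plain text in the response envelope Alexa expects."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def lambda_handler(event, context):
    """Stand-in handler: answer one intent, apologize for anything else."""
    request = event["request"]
    if request["type"] != "IntentRequest":
        return build_speech("Welcome")
    if request["intent"]["name"] == "GetTemperature":
        # Placeholder reply; a real handler would fetch the value from the house.
        return build_speech("The temperature is seventy two degrees")
    return build_speech("Sorry, I don't know that one")


# Call the handler the way Lambda would, minus the real context object.
reply = lambda_handler(sample_request, None)
print(json.dumps(reply, indent=2))
```

Running it locally like this makes it easy to poke at one intent at a time without going through the console for every change.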

You DON'T want to publish this. If you publish it, you'll be allowing whoever hooks their Dot or Echo to this skill to mess with your house. It'll work just fine with your own Dot without publishing, so go give it a try once you work out whatever kinks might have kicked in.

So, now we can get the temperature from our house by voice. Next we want to actually control something. That will mean changes to the voice model, the Lambda function and the code on our Pi.

Bet you can't wait for the next installment where I'm going to discuss a little bit about debugging the Lambda function and start down the path to changing something using voice commands to the house.

Have fun.