I was sitting on a bar stool showing off my Pi web server that controls my house and had to wait 15-20 seconds for each screen change. Loading graphs was painfully slow and would pause in the middle with only half the graph showing. It was embarrassing. When I got home, I took a look and the load average was up in the double digits, something I had never seen before. Obviously, there was some process or other out of control that needed to be fixed. I was wrong.

Granted, it's my oldest Pi, a Pi 1 that I keep only because it's easier than bringing up my Pi 3B to do the same job. I recently put an SSD on it, and it logs to a database server up in the attic, so it's been fast enough. Now it was just crawling along.

When I went looking for the cause, I found that someone out there on the web was loading my data as fast as the process could run. Don't misunderstand, there's nothing secret there, and folk visit my site all the time to see what's different from the last time. I've had something similar happen before, and it was quite innocent. What most people don't realize is that a site that automatically updates by doing a periodic GET will continue to update when it's put in the background. So, you visit one of the news sites, hit the back button down at the bottom of the phone, the app disappears and you go do something else, and the app continues to run, updating a screen you can't see. This can cause data overages and such, but the app is ready when you come back. It shows up in my logs as someone on the site for a very long time.

Almost all sites are polite about this auto-update and only update on a multi-minute schedule; I have my site update every 10 seconds because I want to double check and see that the garage door actually closed like it was supposed to. What was happening was someone had set up a loop that would grab the data again as soon as it was delivered. That caused a lot of database read activity and slowed the machine down a LOT. Of course, I didn't realize this at first and assumed I needed to check the efficiency of the data gathering steps.

I have a PHP script called housedata.php that gathers the data from my database and returns it to the web user. It's a rather simple implementation, so I started timing the various operations by commenting out pieces and running it under the 'time' command in bash. The stupid little process was taking 4.75 seconds on average to finish at first, but after some database query changes it went way down. All that did, though, was allow the person out there to call my machine faster.
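
If you want to repeat that kind of timing, a rough sketch might look like the following. The helper name `avg_ms` is made up for illustration, it assumes GNU date (for the `%N` nanosecond format), and running the script as `php housedata.php` from the command line is an assumption about the setup.

```shell
# Hypothetical helper: run a command several times and print the average
# wall-clock time in milliseconds. Assumes GNU date, which supports %N.
avg_ms() {
    local runs total i start end
    runs=$1
    shift
    total=0
    i=0
    while [ "$i" -lt "$runs" ]; do
        start=$(date +%s%N)
        "$@" > /dev/null 2>&1          # discard the command's own output
        end=$(date +%s%N)
        total=$(( total + (end - start) / 1000000 ))
        i=$(( i + 1 ))
    done
    echo $(( total / runs ))
}

# Example (assumes the php command-line binary is installed):
#   avg_ms 5 php housedata.php
```

Averaging over several runs smooths out the odd slow run caused by whatever else the machine happens to be doing.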

I looked at excluding the person by IP address using the features of the apache2 web server and succeeded in stopping the interaction quite nicely. That made me think about what else might be going on, so I took a closer look at the logs. There was the usual script-kiddie trash looking for 10-year-old vulnerabilities, search engines prowling around, and days' worth of this person beating on my machine. I had fixed the problem, so I improved the speed of housedata.php a little more and called it done. The next morning, the person was right back in there with a slightly different IP address doing the same thing.
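
One quick way to spot this kind of visitor in the logs is to count hits per client address. This is only a sketch: the function name `top_clients` is made up, and it assumes the usual Apache common or combined log format, where the client address is the first field on each line.

```shell
# Count hits per client IP in an Apache access log and list the busiest.
# Assumes the client address is the first whitespace-separated field.
top_clients() {
    # $1 = log file, $2 = how many addresses to show (default 10)
    awk '{ print $1 }' "$1" | sort | uniq -c | sort -rn | head -n "${2:-10}"
}

# Example; this is the Debian default path and may differ on your machine:
#   top_clients /var/log/apache2/access.log 5
```

Each output line is a hit count followed by the address, busiest first, so a runaway loop tends to stand out at the top.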

I added the new address to the web server exclusions and noticed that it was in a subnet belonging to the internet provider the person was using. Ha! I excluded the entire subnet to stop the problem. The trouble with excluding IP addresses in the web server is that the web server still starts a process for each hit. That takes time and machine resources; not a lot, but enough to notice over time. It looked like it was time to actually bring up a firewall to protect the little machine.

I already knew about 'iptables', but have you ever tried to use that thing? It's really hard to set up, and I could mess it up pretty badly, leaving holes where there shouldn't be any and locking myself out of my own machine. I shuddered at trying to get that working without a month's worth of research, but then discovered 'ufw', a tool designed to help with that process. I did the dreaded apt-get update command and then an apt-get install ufw so I could try it out. Notice that I did NOT use apt-get upgrade! I'm getting really tired of having too much stuff on my machine replaced by well-meaning folk out there. The last time I did that I wound up with a new, slightly incompatible operating system (jessie).

Since I run headless (no keyboard or console), I was afraid of actually enabling the firewall: it would block port 22, and I wouldn't be able to get into the machine to do anything without dragging it to a TV set somewhere and poking around for hours sitting on the floor in front of it. Fortunately, the folk that put the package together left a note in one of the configuration files about this very thing and I did what they suggested. Gritting my teeth in expectation of failure, I started the process and it warned me that ssh sessions could be interrupted and asked for confirmation. I gritted a little harder and answered 'Y'.

It came up just fine and didn't affect the ssh session at all. I was on my way.
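
One common way to avoid the lockout (not necessarily what the package note recommends) is to add the ssh rule before turning the firewall on. The sketch below only prints the commands unless `APPLY=1` is set; the `run` wrapper, the `APPLY` variable, and the function name are made up for illustration, and the 192.168.0.0/16 range is taken from the rule list further down.

```shell
# Dry-run wrapper (hypothetical): print each command unless APPLY=1 is set,
# so the steps can be reviewed before anything changes on a headless box.
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        sudo "$@"
    else
        echo "would run: $*"
    fi
}

enable_firewall_safely() {
    # Let the local network reach ssh BEFORE the firewall is switched on.
    run ufw allow from 192.168.0.0/16 to any port 22
    # ufw warns that existing ssh connections may be disrupted and asks
    # for confirmation before it enables itself.
    run ufw enable
}

enable_firewall_safely
```

Running it as-is shows the two commands; running it with `APPLY=1` in the environment executes them through sudo.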

If you have to do this, one thing you'll find annoying is the huge number of introductions, tutorials, promotions, and examples out there on the web that don't tell you what you want to know. Sure, they tell you stuff that is valuable, but I didn't find a single one that covered what I needed to do; it was all trial and error. Painful trial and error. After about an hour I came up with an idea: look at the darn log file created by ufw to see what was going on. On the Pi, the log records are mixed in with other stuff in the file /var/log/messages. So, I set up a way to watch it and see what was happening:

tail -f /var/log/messages | grep UFW
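
Once the log has collected a few entries, it can help to boil them down to who is being blocked and on which port. A minimal sketch, assuming the usual kernel log layout in which each "[UFW BLOCK]" line carries SRC= and DPT= fields; the function name `ufw_block_summary` is made up.

```shell
# Summarize blocked packets by source address and destination port.
# Reads syslog lines on stdin. Lines without a DPT= field (ICMP, for
# example) are skipped.
ufw_block_summary() {
    grep -F '[UFW BLOCK]' |
        sed -n 's/.* SRC=\([^ ]*\) .* DPT=\([^ ]*\).*/\1 port \2/p' |
        sort | uniq -c | sort -rn
}

# Example:
#   ufw_block_summary < /var/log/messages
#
# If the live grep output is piped onward to another command, GNU grep's
# --line-buffered option keeps lines from being held back in a buffer:
#   tail -f /var/log/messages | grep --line-buffered UFW | cut -c1-160
```

The output is a count followed by the address and port, most frequent first.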

After watching a while for the various things that were being dropped, changing the configuration, and watching some more, I got it working perfectly for my purposes. I had a little annoyance with the order of the rules. See, when ufw (actually iptables; ufw is just an interface) sees a packet, it steps through its rules in order and stops at the first one that matches. So if you allow access to port 80 as the first line, you can't exclude a specific IP address later; it already let the packet through and stopped looking. So, put the stuff you want stopped first and the stuff you want to allow after it.
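
If an allow rule is already in place, ufw's `insert` form lets you put a deny rule ahead of it instead of deleting and re-adding everything. The sketch below only prints the commands so they can be reviewed first; the function name is made up, and it assumes the rule list is not empty, since ufw rejects an insert position that doesn't exist yet.

```shell
# Print (not run) the ufw commands that place deny rules for the given
# subnets at the top of the rule list, in the order given.
deny_first_commands() {
    local pos subnet
    pos=1
    for subnet in "$@"; do
        echo "sudo ufw insert $pos deny from $subnet"
        pos=$(( pos + 1 ))
    done
}

deny_first_commands 69.145.122.0/24 180.76.15.0/24
# prints:
#   sudo ufw insert 1 deny from 69.145.122.0/24
#   sudo ufw insert 2 deny from 180.76.15.0/24
```

After running the printed commands, `sudo ufw status numbered` shows where each rule landed.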

I allowed all the machines on my local network to get to the web server for various things, but only port 80 is open outside the house. Here's the list I'm currently using:

    pi@housemonitor:/var/log/apache2$ sudo ufw status numbered
    Status: active

         To                         Action      From
         --                         ------      ----
    [ 1] 22                         ALLOW IN    192.168.0.0/16
    [ 2] Anywhere                   DENY IN     69.145.122.0/24
    [ 3] Anywhere                   DENY IN     180.16.15.0/24
    [ 4] Anywhere                   DENY IN     180.76.15.0/24
    [ 5] 80                         ALLOW IN    Anywhere
    [ 6] 3551                       ALLOW IN    192.168.0.0/16
    [ 7] Samba4                     ALLOW IN    192.168.0.0/16
    [ 8] 224.0.0.251                ALLOW IN    192.168.0.0/24
    [ 9] 224.0.0.1                  ALLOW IN    192.168.0.0/24
    [10] 22                         ALLOW IN    Anywhere (v6)
    [11] 80                         ALLOW IN    Anywhere (v6)
    [12] 3551                       ALLOW IN    Anywhere (v6)
    pi@housemonitor:/var/log/apache2$

First, I allow port 22 from all internal addresses. Like I said, I worry about cutting off my own access to the machine. Then comes a series of nets I excluded because I saw them messing around. The 69.145.122.0/24 subnet is where the annoying traffic was coming from; the two subnets that start with 180 belong to a Chinese web crawler for a search engine. It was hitting my machine every 30 minutes from two different addresses that changed within those ranges. I don't mind search engines, but every 30 minutes? The IP version 6 entries are the defaults; I haven't gotten to them yet.
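
To see what a /24 or /16 entry in that list actually covers, here is a small sketch that checks whether an IPv4 address falls inside a subnet written in CIDR form. The function names are made up, and it assumes a shell with 64-bit arithmetic (bash and dash both qualify).

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Succeed if address $1 is inside subnet $2 (for example 69.145.122.0/24).
in_subnet() {
    local addr net bits mask
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")      # part before the slash
    bits=${2#*/}                    # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# A /24 fixes the first three numbers; a /16 fixes only the first two.
in_subnet 69.145.122.17 69.145.122.0/24 && echo "blocked by rule 2"
in_subnet 192.168.1.50 192.168.0.0/16 && echo "inside the /16"
in_subnet 192.168.1.50 192.168.0.0/24 || echo "outside the /24"
```

That is why one deny rule per subnet was enough to catch an address that kept changing in its last number.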

Port 3551 is for my APC UPS that I wrote about recently. That device is working really well and controls the shutdown of all my Pis, so I want the machines to be able to interact. Of course I use Samba to move files around, so there's an entry for that. The two 224 addresses are multicast (224.0.0.251 is mDNS and 224.0.0.1 is the all-hosts group); I put the entries in just to keep them out of the log.

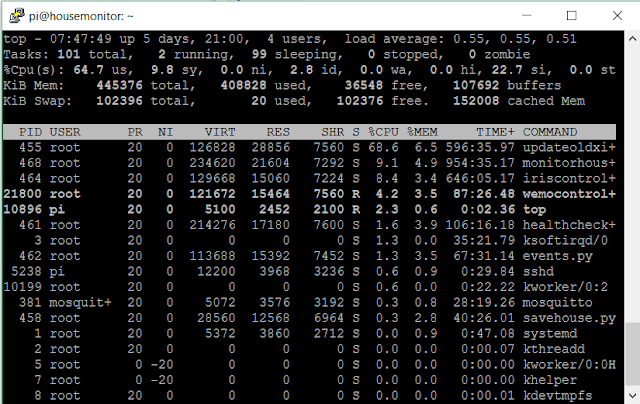

As soon as I did that, my load level dropped to fractional numbers; the machine finally had time to actually do things.

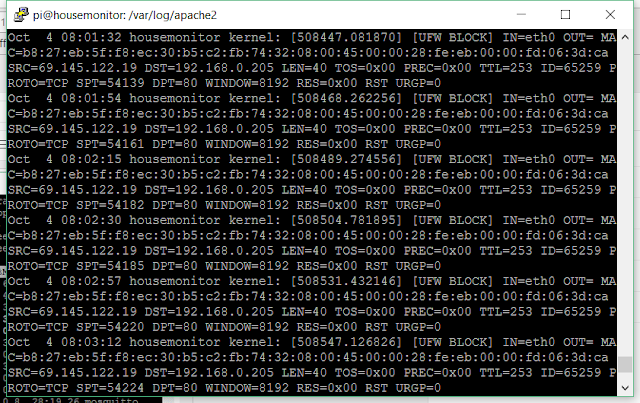

Also, since the person out there was getting timeouts from not being able to get a response from my machine, the hits dropped to one every 30 seconds or so. These hits are dropped by the firewall before they ever reach the web server, so they don't cause me any problems at all.

This rather busy screenshot shows the hits roughly every 30 seconds trying to load stuff, and each of them gets dropped with no response. The really annoying thing is that this person is STILL trying to get in. I've been working on this for a few days now and this robot doesn't have the smarts to try something else. I know it's a 'bot because it goes directly after the data server code without going through the web page. After I finished with the changes I let the machine run overnight and looked again; neither the web crawler nor the annoying 'bot got in.

Success!

At least so far. Sure, I'll get annoying traffic again, but now I know how to stop it and have the means to do it relatively easily.

Don't misunderstand, I don't mind people looking at the site at all; I encourage folk to take a look. It's there not only so I can close my garage doors, but to serve as an example and source of ideas and suggestions. I just ask that you don't set up scripts to mess around with it for days at a time, and until now, everyone has been really nice about it. Some of my (ahem) ideas have come from people looking around and suggesting things. I've saved many a kid from a bad grade in a computer science class because they can steal code from me. And, there have been a couple of seriously interesting term papers written based on things they found here.

I'm actually contributing ... well sort of.

One last thing came out of this exercise. I had to put in a fake 'index.html' page because of the various 'bots that want to prowl around the site. What happens is the 'bot goes for the web site and then uses the URLs inside the index page to find other stuff on the site. Then it tries various 'exploits' on each of the pages to break in and do something bad. If there is no index page, it tries to get a directory listing and starts messing around from there. If you put in a dead-end index page, it can't get a directory listing and there are no URLs in the page to work from; the 'bot gives up and moves on.

The script kiddies and the constant data loading came to the attention of the web monitoring tools at my ISP, and they started expiring my IP lease a couple of times a day to break up the traffic. Each time they did that, I was off the network for a short time while the DNS servers were updated. I have code in place for this kind of thing, so I didn't have to do anything, but it got annoying. We'll see if my changes remove this problem.
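
The dead-end index page described above can be as small as a few lines of HTML with no links in it. A minimal sketch; the function name is made up, the page text is arbitrary, and the directory you pass in should be whatever your web server uses as its document root.

```shell
# Write a minimal index page that contains no links for a crawler to follow.
# $1 = the web server's document root (pass your own path).
write_dead_end_index() {
    cat > "$1/index.html" <<'EOF'
<!DOCTYPE html>
<html>
<head><title>Nothing here</title></head>
<body><p>Nothing to see here.</p></body>
</html>
EOF
}

# Example (the path is an assumption and may need sudo on your machine):
#   write_dead_end_index /var/www
```

With that file in place the server hands back the page instead of a directory listing for that directory.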

All in all, this was both annoying and fun. I got to learn about new stuff, make some changes to my code that work really well, and have something new to brag about when I go to the bar.